Cloud Computing and Virtualization have received a lot of attention lately, largely due to a huge marketing push by a number of big corporations hoping that peddling shared computing infrastructure solutions is the “next big thing.” If these advertising messages are to be believed, Cloud Computing and Virtualization provide increased reliability, reduced expenses and an unprecedented level of convenience for most I.T. applications.

Call me a technological heretic, but I believe that virtualized cloud infrastructure guarantees none of those things, while taking away a lot of control over how your resources are allocated.

While shared infrastructure solutions offer a convenient and effective answer to certain I.T. challenges, they are far from the “magic bullet” cure described by the Cloud Computing orthodoxy (whose ranks include cloud computing infrastructure service providers, I.T. consultants, hardware vendors and software developers).

Understanding the Roots of Cloud Computing

Contrary to what you may have heard recently, the concept of sharing processing power and storage space among multiple computers is neither new nor radical. Cloud Computing refers to nothing more than multiple systems, applications and users sharing a finite quantity of CPU, storage and operating environment. Shared data processing infrastructures have been in use since the IBM 360 was introduced in 1964, nearly five decades ago. Since then, we’ve seen wave after wave of shared computing solutions come and go, from mainframes to minicomputers.

Now, mainframes and minicomputers are still in use today, and they still offer effective solutions for a very specific set of tasks. Yet consumer demand has sided with personal computers since the introduction of client-server architecture in the 1980s, leading mass-market software and hardware developers to spend the last few decades fixating on how to produce reliable and stable dedicated computing solutions. These developers all but perfected this architecture by the early 2000s, and since then they’ve been searching high and low for the “next big thing” to focus on. Since the introduction of Virtualization, they seem to have decided that this “next big thing” is Cloud Computing. The word “Cloud,” which normally implies a lack of transparency (you can’t really see through it), has suddenly become a slick marketing concept for shared and virtualized I.T. infrastructure. Ouch! Well, I hate to break it to you, folks: Cloud Computing is not exactly the “next big thing.” It is an old concept that has been around for half a century!

Problems with the Cloud-Based Infrastructure

Resource Bottlenecks

Imagine you have a server with 500 GB of hard drive space and a multi-core processor. Now imagine you aren’t the only user saving files to this computer. Imagine a dozen other users store their files on this hard drive. That 500 GB of hard drive space is going to fill up pretty quickly!

What’s worse, imagine you’ve put in a request to use a file or application stored on that hard drive, and 7 other users have put in similar read/write requests just before you. You will need to wait for all 7 of those requests to complete before you can access your file or launch your application. Read/write request queues cause system slowdowns, and these queues are RAMPANT and NORMAL in the shared storage solutions widely used in Cloud Computing. The same rules and concepts apply to CPU, network and other elements of shared infrastructure.
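To put a number on that queue, here is a minimal sketch that assumes a single shared disk serving requests strictly first-in, first-out at a hypothetical fixed 10 ms per request. Real storage schedulers reorder and parallelize requests, so the figures are illustrative only; the tenant names and the service time are made up.

```python
from collections import deque

# Hypothetical service time per read/write request, in milliseconds.
SERVICE_TIME_MS = 10.0

def fifo_completion_times(requests, service_time_ms=SERVICE_TIME_MS):
    """Serve requests first-in, first-out on one shared disk.

    Returns a dict mapping each requester to the time (ms) at which its
    request finishes, assuming every request arrives at t = 0.
    """
    queue = deque(requests)
    clock = 0.0
    finished = {}
    while queue:
        requester = queue.popleft()
        clock += service_time_ms  # the disk handles one request at a time
        finished[requester] = clock
    return finished

# Seven other tenants queued just ahead of you, then your own request.
others = [f"tenant-{i}" for i in range(1, 8)]
times = fifo_completion_times(others + ["you"])
print(times["you"])  # 80.0: 70 ms of waiting plus 10 ms of your own service
```

On a dedicated disk the same request would finish after 10 ms; on the shared one, your latency depends entirely on how many strangers got there first.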

Ability to Predict Usage Patterns

Most users believe that their service provider has an unlimited pool of computing resources. Others believe they will be able to avoid these queues by minimizing their personal resource usage. Both of these assumptions sound reasonable at first, until you realize the biggest problem with Cloud Computing: its unpredictability. It’s not only difficult to accurately predict your own resource requirements from day to day, it’s absolutely impossible to predict the resource usage of the tens, hundreds or even thousands of other users competing for the same shared, finite computational supply.

Cloud infrastructure providers often attempt to identify usage patterns among their clients and to schedule waves of resource allocation to match those patterns. This sounds promising at first, until you realize it is often impossible to predict changes in a seemingly “identified” usage pattern. A Cloud provider may be able to get an idea of general usage patterns across its client base, but it NEVER knows when a random usage spike will occur. Such spikes can radically affect resource allocation for every user on a Cloud network.

Even with the best planning based on past performance, usage spikes in Cloud infrastructure systems CAN BE UNPREDICTABLE and UNAVOIDABLE.

How Service Providers Try to Solve the Resource Problem

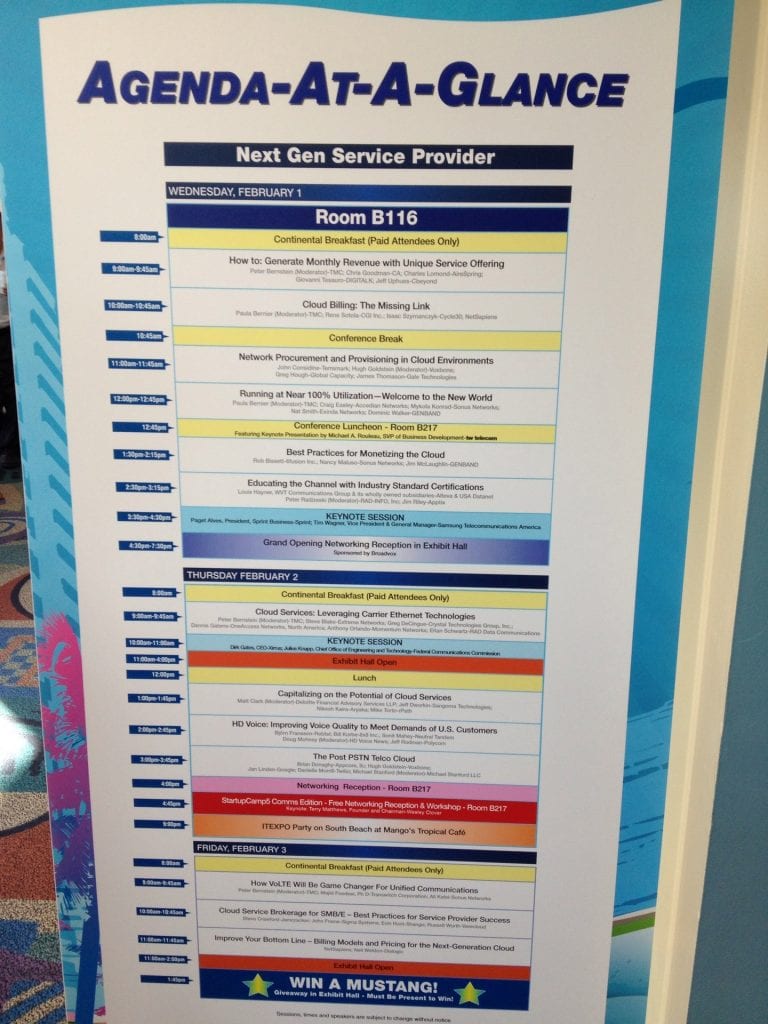

Companies offering Cloud Computing infrastructure are far from ignorant of the major problems underlying their services, and they aren’t completely incapable of addressing the problems intrinsic to shared computing solutions. These issues are widely recognized among industry experts and were the subject of numerous discussions at ITEXPO, which took place in Miami, FL, in February 2012. Just consider sessions such as “Running at Near 100% Utilization – Welcome to the New World” and “Unified Communications: Is the Public Cloud Your Enemy?”

Modern virtualization software allows Cloud Computing service providers to incrementally grow, distribute and, periodically, re-provision their actual physical infrastructure in order to compartmentalize its shared resources based on different service level commitments.

Adding physical hardware and software, however, carries the cost of added system complexity, ultimately leading to diminishing returns per processing unit. Virtualization software has to do more work coordinating more hardware, which adds overhead. As a result, overall system performance per processing unit decreases. The law of diminishing returns applies to every component that makes up virtual infrastructure: storage, the I/O subsystem, processors, buses and network elements.
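The article does not name a model for this overhead. One widely used stand-in is Neil Gunther's Universal Scalability Law, C(N) = N / (1 + α(N − 1) + βN(N − 1)), where α captures contention for shared resources and β captures coordination (crosstalk) cost. The sketch below uses hypothetical coefficients α = 0.05 and β = 0.001 purely for illustration; they are not measurements of any real platform.

```python
# Universal Scalability Law (Gunther), used here as an illustrative stand-in
# for the coordination overhead described in the text.
ALPHA = 0.05   # hypothetical contention coefficient
BETA = 0.001   # hypothetical coordination (crosstalk) coefficient

def usl_capacity(n, alpha=ALPHA, beta=BETA):
    """Relative throughput of n processing units (one unit == 1.0)."""
    return n / (1 + alpha * (n - 1) + beta * n * (n - 1))

for n in (1, 8, 32, 128):
    total = usl_capacity(n)
    # Per-unit throughput shrinks as units are added: diminishing returns.
    print(f"{n:4d} units: total {total:6.2f}, per unit {total / n:.3f}")
```

With these made-up coefficients, per-unit throughput falls steadily as units are added, and because β > 0 the model even predicts total throughput eventually declining: 128 units deliver less than 32.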

Service compartmentalization, otherwise referred to as “Capacity Management,” doesn’t prevent service providers from oversubscribing their infrastructure and creating a situation where resource shortages are commonplace. Oversubscription in itself is an old concept, particularly in the telecom industry. Traditional PSTN networks are designed around managed oversubscription, on the assumption that the users connected to telephone ports will never all, or even half of them, be on the phone at the same time. But managing one type of finite resource is a lot easier than managing many.
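The telecom reasoning can be made concrete with a simple binomial model: N subscribers, each independently active with probability p, sharing C lines. Telephone traffic engineers normally use the Erlang B formula for this; the binomial version below is a simpler stand-in, and every number in it is hypothetical. The second function illustrates the point about managing many resources: assuming shortages in each resource are independent, the chance that at least one of them is short grows with the number of resources.

```python
from math import comb

def prob_demand_exceeds(subscribers, p_active, capacity):
    """P(more than `capacity` subscribers are active at once), assuming each
    subscriber is independently active with probability p_active (binomial)."""
    return sum(
        comb(subscribers, k) * p_active**k * (1 - p_active) ** (subscribers - k)
        for k in range(capacity + 1, subscribers + 1)
    )

def prob_any_shortage(shortage_probs):
    """P(at least one resource is short), assuming independent shortages."""
    p_none = 1.0
    for p in shortage_probs:
        p_none *= 1 - p
    return 1 - p_none

# Hypothetical: 100 subscribers, each busy 10% of the time, 20 lines (5:1).
single_pool = prob_demand_exceeds(100, 0.10, capacity=20)
print(f"Single pool, 20 lines: {single_pool:.4%}")
# The same per-resource risk across CPU, storage, network and memory.
print(f"Any of four resources: {prob_any_shortage([single_pool] * 4):.4%}")
```

Raising the oversubscription ratio (fewer lines for the same subscribers) or adding more independently oversubscribed resources both push the shortage probability up.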

Cloud infrastructure service providers have a conflict of interest: to maintain their economies of scale, they cannot afford to expand their infrastructure enough to fully guarantee all of their clients’ needs. Doing so simply would not fit a business model that relies on oversubscription.

How Will a Service Provider Treat Your Needs during a Resource Shortage?

When demand outstrips supply, a provider has two basic options:

- They can allocate their resources equally among their users to ensure everyone receives the same share of processing capacity.

- They can prioritize certain clients over others, ensuring some of their clients receive all the resources they require while others see their operations slow to a crawl.

Of these two options, Cloud Computing providers almost always choose option #2.

Cloud Computing providers never purchase enough infrastructure to meet all of their contractual obligations at all times, because doing so would be incredibly expensive. If Google actually provisioned the full sum of the resources it has sold through its Cloud Computing services, it would need a server farm the size of Washington, D.C.! In fact, the larger the Cloud infrastructure, the more oversubscribed it is.

Providers are just as unlikely to divide their resources evenly among their clients. Doing so would leave every one of their clients with impaired and unreliable service. Given the choice between upsetting ALL of their clients and upsetting SOME of them, Cloud Computing providers will always prefer the latter.

This means that, in the face of resource shortages, Cloud Computing providers will prioritize some clients over others, giving some of them all the resources they need while severely draining those resources from the rest of their user base.
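The two options described above can be contrasted in a short sketch. Equal allocation is modeled here as max-min fair sharing (a standard formalization, not something the article specifies), and prioritization as strict priority ranked by a hypothetical per-client revenue figure. The client names, demands, capacity and revenues are all made up.

```python
def allocate_equal(capacity, demands):
    """Max-min fair share: repeatedly split the remaining capacity evenly
    among clients whose demand is not yet met."""
    alloc = {c: 0.0 for c in demands}
    remaining = float(capacity)
    unmet = {c for c, d in demands.items() if d > 0}
    while unmet and remaining > 1e-9:
        share = remaining / len(unmet)
        for c in sorted(unmet):
            give = min(share, demands[c] - alloc[c])
            alloc[c] += give
            remaining -= give
        unmet = {c for c in unmet if demands[c] - alloc[c] > 1e-9}
    return alloc

def allocate_by_priority(capacity, demands, priority):
    """Strict priority: serve clients in descending priority order,
    each up to its full demand, until capacity runs out."""
    alloc = {}
    remaining = float(capacity)
    for c in sorted(demands, key=lambda c: priority[c], reverse=True):
        give = min(demands[c], remaining)
        alloc[c] = give
        remaining -= give
    return alloc

# Hypothetical shortage: 130 units demanded, only 100 available.
demands = {"A": 60, "B": 40, "C": 30}
revenue = {"A": 5000, "B": 1000, "C": 200}  # hypothetical monthly spend
print(allocate_equal(100, demands))
print(allocate_by_priority(100, demands, revenue))
```

Under fair sharing, A and B each end up with about 35 units and C gets its full 30, so everyone but the smallest client is somewhat short. Under strict priority, A and B are fully served and C, the least profitable client, receives nothing.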

The Problem with Prioritization

Cloud Computing is often positioned as an effective way to allocate computational resources and costs. Yet prioritization is hardly fair when service falls short of the SLA. If prioritization takes place within a shared solution, some users will receive the resources they require, while others will be left with a smaller computational base than they signed up for. To make matters worse, which users get priority and which get the short end of the stick is hardly random: Cloud service providers may give their most valuable users what they need in the face of surging demand by taking those resources directly from the users they consider less valuable.

The term “valuable” is a little nebulous, so let’s state the problem of prioritization unambiguously:

- The more financially profitable you are to your Cloud provider, the more resources you will receive in the face of network limitations.

- The less financially profitable you are to your Cloud provider, the fewer resources you will receive in the face of network limitations.

How important is your business to your service provider?

Another Side of Cloud Computing’s Appeal

This isn’t going to win me a lot of friends, but in my opinion the I.T. culture of the past two decades has created a new type of I.T. manager: one for whom passing off responsibility has become the established norm. When a key infrastructure component fails or underperforms, there is now a service provider to point a finger at. With cloud computing infrastructure, things become all too convenient: responsibility for hardware, licensing, security, resource management and service levels is either shared with or completely passed on to the service provider. With shared responsibility, there is always a question of where the buck really stops, and it usually isn’t with the paying customer.

Redundancy, Scalability, Performance

Cloud solutions have been offered as the answer to a truly massive set of problems, including problems that extend beyond traditional computer usage. For example, Cloud solutions have been offered as the way to build unified communications programs. But despite provider claims, Cloud-based unified communications solutions are just as infeasible as any other shared solution.

Redundancy, scalability and performance are three different elements driving the I.T. industry. The more redundant a system is, the more processing power it uses to coordinate its resources. Redundancy, in essence, illustrates the classic law of diminishing returns: increased redundancy and scalability lead to increased complexity, and the more complex a system becomes, the more computing power it takes just to govern its resources.

In the world of communications, Cloud-based unified communications services often run on large, highly redundant shared computing platforms. At any given moment, these platforms may host clients requiring as few as 10 extensions averaging 2 concurrent calls alongside larger clients, such as call centers, requiring 2,000 extensions and averaging 300 concurrent calls. It’s impossible to say who you will be sharing your storage and processing resources with at any given moment. In this respect, Cloud voice solutions share the weaknesses of Cloud Computing solutions, unless the service provider actually dedicates, rather than shares, the hosted PBX system resources the client is paying for.

The Bottom Line on Shared Solutions vs. Dedicated Hosted Solutions

In the vast majority of applications, dedicated hardware solutions offer superior SLAs to Cloud Computing solutions when it comes to reliability, prioritization and organizational control. This is as true for VoIP Hosted PBX systems as it is for more general-purpose computer systems. Depending on the scale, some dedicated solutions may actually cost more than Cloud infrastructure solutions, but ultimately you get what you pay for.

If your applications require true real-time response, if your organization cannot risk diminished performance due to resource sharing, or if you refuse to allow your resource requirements to be arbitrarily de-prioritized, then you need a dedicated hardware solution.